It takes a one-two punch to make insurance fair.

I’ve previously argued that AI can vanquish bias in insurance, and so it can. But while machine learning can help ensure equality, it cannot ensure equity. It might even make it worse.

Executive Summary

“Equality is about treating everyone the same regardless of their circumstance, while equity is about treating people differently out of regard for their circumstance,” writes Lemonade CEO Daniel Schreiber. Arguing that governments, not insurers, need to promote equity, he proposes a system of solidarity taxes and rebates: tax and rebate percentages, determined by regulators, would be applied to insurance base rates to address the inequities in insurability and affordability that result from perfect risk-based pricing.

This article was originally published by Schreiber on LinkedIn under the title, “AI Doesn’t Do Solidarity.” It is being republished by Carrier Management with permission as part of our “What I Care About” series.

Photo of Dan Schreiber by Ben Kelmer

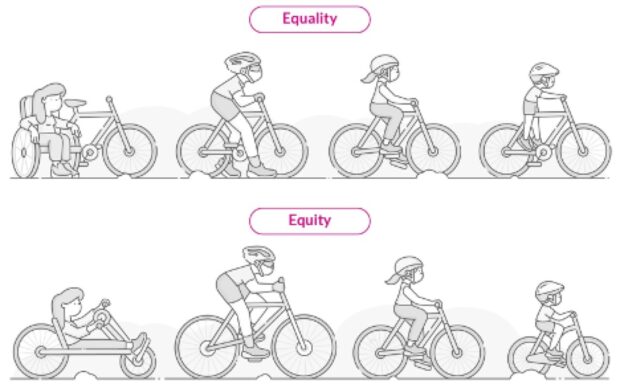

Equality Isn’t Equity

“Equality” and “equity” are often thought of as synonyms, but they are distinct. While both promote fairness, equality is about treating everyone the same regardless of their circumstance, while equity is about treating people differently out of regard for their circumstance.

Equality and equity often pull in opposing directions. Think of the tension between “equal opportunity” and “affirmative action,” for example. And reasonable people may disagree on the optimal mix of the two. What follows is a framework for insurance that, I hope, can be agreeable to all such reasonable people.

Equality

The gist of my first, equality-centric claim was that insurers should strive for Martin Luther King’s dream of judging everyone by the content of their character, not the color of their skin. I pointed out that as long as the industry relies on scant data, insurers have little choice but to group people by surface similarities—and that’s a problem. Crude groupings create inequality (charging unequal amounts per unit of risk), moral hazard (subsidizing the careless at the expense of the careful) and proxy discrimination (as superficial data often correlate with race, gender or religion).

Under the weight of big data and machine learning, I argued, coarse groupings can be relentlessly shrunk until—in some ideal future—each person is a “group of one” to be judged by the content of their character. The fairness of this black box, in turn, can be judged by whether it produces a uniform loss ratio (ULR) across protected classes. (The argument is explained more fully in my 20-minute presentation to regulators, available on YouTube.)
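A uniform-loss-ratio check can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the method presented to regulators. It assumes each policy record carries a protected-class label, an earned premium and incurred claims; the field names `group`, `premium` and `claims` are hypothetical. It treats "uniform" as every class landing within an absolute tolerance of the pooled loss ratio. The 0.05 tolerance is an arbitrary placeholder, and a real test would more likely rely on a statistical significance threshold.

```python
from collections import defaultdict


def loss_ratios_by_class(policies):
    """Return {class label: incurred claims / earned premium} per protected class."""
    premiums = defaultdict(float)
    claims = defaultdict(float)
    for p in policies:
        premiums[p["group"]] += p["premium"]
        claims[p["group"]] += p["claims"]
    return {g: claims[g] / premiums[g] for g in premiums if premiums[g] > 0}


def passes_ulr(policies, tolerance=0.05):
    """True if every class's loss ratio is within `tolerance` of the pooled ratio.

    The tolerance is a hypothetical placeholder, not a regulatory standard.
    """
    pooled = sum(p["claims"] for p in policies) / sum(p["premium"] for p in policies)
    return all(abs(r - pooled) <= tolerance for r in loss_ratios_by_class(policies).values())


fair_book = [
    {"group": "A", "premium": 1000.0, "claims": 600.0},
    {"group": "A", "premium": 500.0, "claims": 300.0},
    {"group": "B", "premium": 800.0, "claims": 480.0},
]
# Same book, but class B is charged 50 percent more for identical losses.
skewed_book = [
    {"group": "A", "premium": 1000.0, "claims": 600.0},
    {"group": "A", "premium": 500.0, "claims": 300.0},
    {"group": "B", "premium": 1200.0, "claims": 480.0},
]
```

`fair_book` passes because both classes run at a 0.6 loss ratio. `skewed_book` fails: class B now runs at 0.4 against a pooled ratio of roughly 0.51, which is the signature of a class paying more per unit of risk.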

Mission accomplished? Well, up to a point.

A future where each person contributes to the insurance pool in direct proportion to the risk they represent—rather than the risk represented by a large group of superficially similar people—sounds like a great terminus, but it may not be enough. In fact, without an additional layer of equity, it may not even be a step in the right direction.

Equity

Insurance, ideally conceived, is about a community of people pooling resources to help their weakest members in their hour of need. It’s a social good. But “perfect” pricing can run counter to the social good.

Consider, for example, a baby born with a congenital heart defect. The “risk they represent” for health insurers would be so high as to make them uninsurable. Not a social good.

The same hardship would pop up across insurance lines. Take people raised in historically disadvantaged communities. By dint of birth, a home that is poorly built, poorly maintained and in a high-crime neighborhood may be all they can afford—but they may not be able to afford the home insurance premiums commensurate with these compounding risk factors.

I subscribe to the dictum that “there is no such thing as a bad risk, only a mispriced risk.” But what do you do when precise pricing puts insurance beyond the reach of those who need it most?

The fuzzy pricing of yesteryear took the edge off this problem. When averages are used in lieu of precision underwriting, good risks pay too much, bad risks pay too little, and would-be uninsurables slip through undetected.

If equality is the unintended victim of paltry data, equity is its unintended beneficiary.

But hoping insurers will forever lack the ability to detect outlying risks is a flimsy basis for solidarity. If inclusion is a mere artefact of shoddy data, it will be squeezed out by big data.

The better way to engineer solidarity is to engineer solidarity. It’s time to formalize an equitable policy in insurance, and this is where governments need to step in.

Golden Rule

Before diving into my proposal, a brief philosophical interlude. What constitutes “fair” has been debated since the dawn of time and involves tradeoffs between values—with liberty and equity often vying for supremacy. Anyone presuming to advocate for a particular policy, therefore, ought to take note of these tradeoffs and be transparent about their position along this continuum.

To wit: MLK formulated what I see as true north for equality, and ethical philosopher John Rawls formulated what I see as the equivalent for equity.

Imagine, says Rawls, if you didn’t know who you would be—what state of health, what state of wealth, what race, what gender etc.—and now formulate what you think are fair rules for society. Behind this veil of ignorance, to use his phrase, formulate a social contract you’ll regard as fair no matter what health, wealth, race or gender you discover when you remove the veil. It’s a thought experiment that’s impossible to implement perfectly—we cannot unknow who we are—but it focuses the mind in ways I find helpful.

MLK challenged us to be color blind when looking at the other; Rawls challenged us to be blind to our own station in life. Both are embodiments of the Golden Rule, a version of which is found in every ethical tradition and which Hillel the Elder formulated as: “What is hateful to you, do not do to the other.”

With this framework in mind, what need we do to ensure our industry is inclusive and fair?

Solidarity

I think it is the role of society at large—through representative government, rather than insurance companies—to ensure everyone has access to essentials such as healthcare, education, housing and safe streets. Indeed, redistributive tax policies allow many countries to finance broad social safety nets that act as a form of insurance for those who need it most. Universal healthcare finances treatments for congenital heart defects, community policing provides for safe streets, and Social Security benefits cover many other basic needs.

It is preferable to tackle equity at a societal level rather than at an industry level; and the more comprehensive a country’s social safety net, the less need there is to regulate insurance-specific interventions.

To be clear, where the welfare state comes up short, insurance regulation—not self-regulation—will be needed.

Here’s why.

Any insurance company that implements its own redistributive policies will be adversely selected into bankruptcy. Charging better risks extra to subsidize policies of the less fortunate will trigger an outflow of good risks and an influx of loss-making ones.
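The adverse-selection spiral can be made concrete with a toy model. This is a deliberately simplified sketch under strong, hypothetical assumptions. One insurer charges every customer the average expected loss of its book, so better risks pay extra to subsidize worse ones. A competitor prices each risk exactly. Every customer whose own expected loss is below the averaged price switches. The function name and inputs are illustrative only.

```python
def simulate_adverse_selection(expected_losses, max_rounds=10):
    """Toy model of a redistributive insurer losing its good risks.

    Each round the insurer charges everyone the average expected loss of
    its current book. Customers whose own expected loss is below that price
    can buy cheaper, exactly priced cover from a competitor, so they leave.
    Returns a list of (policyholders, price) pairs, one per round.
    """
    book = list(expected_losses)
    history = []
    for _ in range(max_rounds):
        if not book:
            break
        price = sum(book) / len(book)              # averaged, cross-subsidizing premium
        history.append((len(book), price))
        stayers = [x for x in book if x >= price]  # good risks defect
        if len(stayers) == len(book):
            break                                  # nobody left who gains by switching
        book = stayers
    return history


history = simulate_adverse_selection([100.0, 200.0, 300.0, 400.0, 1000.0])
print(history)  # [(5, 400.0), (2, 700.0), (1, 1000.0)]
```

The insurer ends up holding only its single worst risk, at a price that reflects that risk alone. In other words, a voluntary cross-subsidy unwinds itself.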

No. If our industry is going to prioritize equity, it has to take the form of a non-discretionary cost of doing business.

In many jurisdictions, premium taxes (usually 2-3 percent of premiums) fund a pool for rescuing faltering insurers. I believe it is time to levy a similar tax to subsidize insurance for low-income families.

The level of this Solidarity Tax and Rebate (STR), as I call it, and how its proceeds are distributed, is something that may vary from one jurisdiction to the next. The broad architecture, though, could be common across the industry:

1. Lines: Regulators will designate certain insurance lines—home, disability and life, for example—as basic goods everyone should be able to afford.

2. People: Regulators will designate criteria for determining which people are in need of help affording them. In the U.S., for example, eligibility for Medicaid—or some other entitlement program—may be a suitable proxy. Over time, more involved criteria and sliding scales may make sense.

3. Tax and Rebate: When insurers submit rates to regulators, they should include a column for the STR. In most cases this will mean adding the Solidarity Tax (say 3 percent) to the base rate. But when writing designated lines (per 1 above) for designated people (per 2 above), they may submit for regulatory approval a lower Solidarity Tax, no Solidarity Tax or a Solidarity Rebate. In extreme cases—such as the baby born with a congenital heart defect—I’d hope that the Solidarity Rebate could approach 100 percent. (Rates aren’t submitted to regulators in many jurisdictions outside the U.S., where alternative oversight mechanisms—or comprehensive criteria and sliding scales—can substitute for this.)
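The STR column described in the Tax and Rebate item can be sketched as a function of line, eligibility and an approved rate. Everything here is illustrative:

- The designated lines are the examples given in the Lines item.
- The 3 percent standard tax is the text's "say 3 percent."
- The `eligible` flag stands in for whatever criterion a regulator adopts under the People item, such as Medicaid eligibility.
- The function names are hypothetical.

A positive STR is a Solidarity Tax, a negative one is a Solidarity Rebate, and -1.0 means a 100 percent rebate.

```python
DESIGNATED_LINES = {"home", "disability", "life"}  # example lines from the text
STANDARD_TAX = 0.03                                # the illustrative "say 3 percent"


def str_rate(line, eligible, approved_rate=0.0):
    """Solidarity Tax (+) or Rebate (-) as a fraction of the base rate.

    `eligible` stands in for a regulator's criterion, e.g. Medicaid eligibility.
    `approved_rate` is the regulator-approved STR for a designated line written
    for a designated person: anywhere from the standard tax (no relief) down to
    -1.0 (a 100 percent rebate). It is ignored for everyone else.
    """
    if line in DESIGNATED_LINES and eligible:
        if not -1.0 <= approved_rate <= STANDARD_TAX:
            raise ValueError("approved STR must lie between -100% and the standard tax")
        return approved_rate
    return STANDARD_TAX


def premium_with_str(base_rate, line, eligible, approved_rate=0.0):
    """Premium charged to the customer: base rate adjusted by the STR column."""
    return base_rate * (1 + str_rate(line, eligible, approved_rate))
```

Take a base rate of 1,000. An ineligible customer pays about 1,030 on any line, and an eligible homeowner granted a 50 percent rebate pays 500. An approved rate of -1.0 reduces the premium to zero.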

Some insurers, like those targeting high-net-worth individuals, will be net contributors to the STR pool. Others, like those targeting low-income communities, may be net beneficiaries. This is as it should be and will encourage insurers to offer essential lines at affordable prices to those most in need. In any event, regulators should endeavor to balance the STR inflows and outflows each year.

(Editor’s Note: Schreiber confirmed that the idea here is to have state regulators determine the Solidarity Tax rate to be applied to base rates for qualifying lines of insurance, as well as the groups that would qualify for rebates. As the insurer collects premium for each risk, it would collect the tax on STR-surcharged individuals and remit it to the state STR pool; for the risks that qualify for rebates, it would draw from the state pool or retain a portion of the STR tax revenue.)
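The flows described in the editor's note can be tallied per insurer and across the state pool. This sketch is illustrative only, and the insurer names and figures are made up. Each policy is reduced to a (base rate, STR rate) pair. A positive net position means the insurer remits to the pool, and a negative one means it draws from it. The sketch also shows one simple, assumed way a regulator might set the standard tax so that inflows match expected rebates. The text does not specify how balancing would actually be done.

```python
def net_str_position(book):
    """Net STR flow for one insurer's book of (base_rate, str_rate) pairs.

    Positive: Solidarity Taxes collected exceed rebates granted, so the
    insurer remits the difference to the state STR pool.
    Negative: the insurer draws the difference from the pool.
    """
    return sum(base * rate for base, rate in book)


def pool_surplus(books):
    """Pool inflows minus outflows across every insurer in the jurisdiction."""
    return sum(net_str_position(book) for book in books.values())


def balancing_tax_rate(taxed_base_total, rebates_total):
    """Flat Solidarity Tax on taxed base rates that would exactly fund the rebates."""
    return rebates_total / taxed_base_total


books = {
    # Hypothetical insurer focused on high-net-worth clients: all taxed at 3 percent.
    "high_net_worth_insurer": [(5000.0, 0.03), (8000.0, 0.03)],
    # Hypothetical insurer serving low-income communities: 25 percent rebates.
    "community_insurer": [(1000.0, -0.25), (560.0, -0.25)],
}
```

Here the first insurer remits about 390 and the second draws 390, so `pool_surplus(books)` comes out at roughly zero. `balancing_tax_rate(13000.0, 390.0)` recovers the 3 percent rate that achieves that balance.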

The Land of the Blind

Taken together, ULR and STR provide a powerful one-two punch. The ULR ensures there is no discrimination in pricing or claims, while the STR augments this color-blindness with a layer of solidarity and compassion. (Technical note: STR is a deviation from pure equality, which is why the ULR test needs to be run net of STR.)
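The technical note can be illustrated by computing loss ratios both ways. This is a minimal sketch with hypothetical field names (`group`, `base_premium`, `str_rate`, `claims`). Suppose STR eligibility is correlated with a protected class. Then loss ratios computed on the premium actually charged will diverge by class even when base rates are perfectly risk-based. For that reason, the test here uses base premium, that is, premium net of STR.

```python
from collections import defaultdict


def loss_ratios(policies, net_of_str=True):
    """Loss ratio per class, on base premium (net of STR) or on premium charged."""
    premiums = defaultdict(float)
    claims = defaultdict(float)
    for p in policies:
        charged = p["base_premium"] * (1 + p["str_rate"])  # includes the tax or rebate
        premiums[p["group"]] += p["base_premium"] if net_of_str else charged
        claims[p["group"]] += p["claims"]
    return {g: claims[g] / premiums[g] for g in premiums if premiums[g] > 0}


book = [
    # Same risk, same base premium; only the STR differs.
    {"group": "A", "base_premium": 1000.0, "str_rate": 0.03, "claims": 600.0},
    {"group": "B", "base_premium": 1000.0, "str_rate": -0.5, "claims": 600.0},
]
```

Net of STR, both classes run at 0.6. Measured on the premium charged, class A runs at about 0.58 and class B at 1.2. That gap is created entirely by the solidarity layer rather than by any pricing bias.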

Given that levels of the Solidarity Tax and criteria for the Solidarity Rebate are left to the regulators, each jurisdiction can localize the STR framework in light of its pre-existing safety net and its position along the liberty-equity continuum.

The upshot is a system that allows insurers and regulators to balance equality and equity. It enables us to harness big data and artificial intelligence to deliver hitherto unknown levels of equality in insurance while harnessing human solidarity to deliver hitherto unknown levels of equity in the availability and affordability of insurance.

As MLK and John Rawls taught us, when we’re blind to the surface characteristics of others, and blind to our own particulars, we begin to see beyond our biases. I hope ULR and STR are steps in this direction, in the vein of Tertullian’s vision from two thousand years ago: “Two kinds of blindness are easily combined so that those who do not see really appear to see what is not!”

Daniel Schreiber, Lemonade
